I regularly spend time helping build and run insider risk programs for customer organizations. I’ve come to believe that regardless of standing regulations (or lack thereof), implementing pseudonymization is absolutely critical – not just to maintain the privacy of individuals, but to establish and safeguard the integrity of the insider risk function itself.

I’ll break down my thinking on this.

What you see, you cannot unsee

To get to the root of things, we have to talk about psychology and cognitive bias. I touched on this topic in a different context back in May. Whenever a human takes part in a decision-making process involving other humans, bias becomes relevant. In everyday life, its effects are barely noticeable.

For instance, you walk into the company cafeteria. There are three tables. At the first sit people you don’t know very well. At the second sits the annoying bloke from IT who made a nasty joke at your expense at the company party. At the third are a couple of your good associates. You have five seconds to pick a table. Which one do you choose?

For many, it’s almost a rhetorical question. You go eat with your friends.

In social situations, we treat people we like more favorably than people we don’t. It’s human and usually perfectly fine.

This benign phenomenon becomes a problem when we consider that insider risk investigations ultimately hinge on very similar human judgment calls.

Once we are exposed to a piece of information, we absorb it, consciously or unconsciously, into our intuitive judgments. Information, once obtained, is very hard, if not impossible, to discard entirely.

Compromised integrity?

Let’s consider the high-level flow of a typical insider risk investigation with Microsoft Purview Insider Risk Management (IRM).

In IRM, you create policies that automatically look for risky insider activity across various scenarios. Once one or more policies are configured, alerts are triggered according to predefined rules, and the related indicator activities and sequences are detected and scored against defined criteria. The result is a risk level for each alert, which helps human investigators focus on the highest-priority alerts first.

The first opportunity for bias to affect the integrity of an investigation arises at this point. In practice, those tasked with investigating IRM alerts often have very limited time in their work week for this role, perhaps an hour or two.

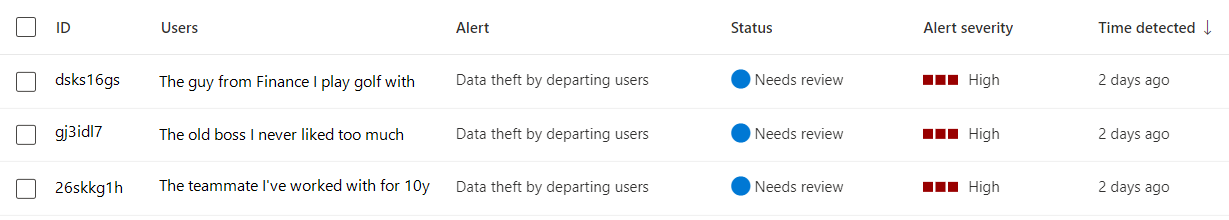

Let’s say the person responsible for triaging IRM alerts sees three high-severity alerts (like those shown above) and only has time to focus on one.

Which one are they most likely to pick, and why? Might they decide which alert to spend their time on without first examining the details of each alert thoroughly and fairly?

I would argue that it is impossible to show investigators personally identifying information like this without it affecting at least some insider risk investigations over time. Even well-intentioned attempts to correct for one’s own negative biases can lead to overcorrection and positive bias instead.

The stakes rise as the investigation progresses. If an alert is deemed risky enough to warrant further scrutiny, it is normally attached to a case. A case can contain one or more alerts for the same individual, allowing their activity to be investigated in a broader context.

The ultimate outcome of an IRM case is a judgment of the individual’s intent – and the corresponding follow-up actions.

If an individual’s actions are interpreted as an honest accident or minor negligence, the consequences are likely to be far, far less severe than if malicious intent is identified. To make matters worse, I would suggest that the same set of activities can be interpreted in different ways, depending on the investigators’ viewpoints. It’s not an exact science.

This is the point where pseudonymization is most crucial. The guiding principle should be “anonymous until proven guilty”: to avoid contaminating the process, an insider’s identity should only be revealed once all the necessary evidence has been collected and assessed.

Some suggestions

I recommend the following as key elements of any functional insider risk program, whether it uses Microsoft Purview Insider Risk Management or other technical capabilities:

- Pseudonymization must be on by default, without exception.

- Pseudonymization must be protected and maintained until all possible evidence has been collected and evaluated in a case.

- There must be a clearly defined process and criteria for removing pseudonymization during a case. Assumed intent must be established and follow-up action recommendations submitted before an individual’s identity is revealed.

- If possible, before moving to reveal an individual’s identity, insider risk investigators should present the evidence and their judgment to a working group or small panel. If it is jointly agreed that the evidence is enough to move the case forward, the responsibility for consequences is transferred from the individual investigator to the organizational function.
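To make these recommendations more concrete, here is a minimal Python sketch of a pseudonymization layer that is on by default and only releases an identity once the case criteria above are met. This is not how Microsoft Purview IRM implements pseudonymization. `PseudonymVault`, `Case`, its field names, the HMAC-based aliases and the two-person panel quorum are all hypothetical choices made for illustration.

```python
from __future__ import annotations

import hashlib
import hmac
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Case:
    """Hypothetical case record; field names are illustrative only."""
    pseudonym: str
    evidence_complete: bool = False
    assessed_intent: Optional[str] = None  # e.g. "accidental", "negligent", "malicious"
    recommended_actions: list[str] = field(default_factory=list)
    panel_approvals: set[str] = field(default_factory=set)


class PseudonymVault:
    """Hypothetical store: pseudonymous by default, identities leave only via reveal()."""

    def __init__(self, secret_key: bytes, quorum: int = 2):
        self._key = secret_key
        self._identities: dict[str, str] = {}  # pseudonym -> real identity
        self.quorum = quorum  # assumed minimum number of panel approvals

    def pseudonymize(self, identity: str) -> str:
        # Keyed hash (HMAC-SHA256): the same user always gets the same alias,
        # but the alias cannot be mapped back without the vault's key and table.
        digest = hmac.new(self._key, identity.encode("utf-8"), hashlib.sha256).hexdigest()
        pseudonym = "user-" + digest[:12]  # shortened for readability
        self._identities[pseudonym] = identity
        return pseudonym

    def reveal(self, case: Case) -> str:
        # Every criterion must be satisfied before the identity is released.
        if not case.evidence_complete:
            raise PermissionError("evidence collection is not complete")
        if case.assessed_intent is None or not case.recommended_actions:
            raise PermissionError("intent and recommended actions must be recorded first")
        if len(case.panel_approvals) < self.quorum:
            raise PermissionError("panel approval quorum not reached")
        return self._identities[case.pseudonym]


if __name__ == "__main__":
    vault = PseudonymVault(secret_key=b"replace-with-a-managed-secret")
    alias = vault.pseudonymize("alex@contoso.com")
    case = Case(pseudonym=alias)
    case.evidence_complete = True
    case.assessed_intent = "negligent"
    case.recommended_actions.append("security awareness training")
    case.panel_approvals.update({"reviewer-a", "reviewer-b"})
    print(alias, "->", vault.reveal(case))
```

Using a keyed hash rather than random aliases keeps each person’s alias stable across alerts, so investigators can still correlate one individual’s activity within a case without knowing who that individual is. Funnelling every re-identification through a single `reveal()` gate also makes it the natural place to log who requested the reveal and why.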

If you have contrasting or supporting thoughts on pseudonymization, please let me know! 👋

That’s it for now. We’ll discuss insider risk more in the future.