Back in 2020-21, when the COVID-19 pandemic ushered in a new paradigm for work seemingly overnight, fears about employees abusing remote work to slack off rose to the surface. Last September’s Work Trend Index report from Microsoft highlighted this in stark terms, reporting that 85% of leaders struggled to feel confident that employees were being productive.

In some organizations, these fears manifested as technical solutions intended to track employee activity, supposedly to ensure people stayed on task. Unfortunately (or.. fortunately?), this kind of adversarial approach seems to have turned out to be counterproductive.

Overtly breaking privacy protections to watch over employees’ shoulders incentivizes people to direct valuable brainpower toward keeping Orwellian monitoring systems happy, rather than focusing on producing quality work and taking care of their work-life balance.

Of course, none of this friction between supposedly procrastinating employees and those tasked with keeping them in check is new – and it has taken on humorous dimensions before.

For instance, anyone who played Sierra adventure games (and others) back in the 90s might remember the infamous Boss Key, which hid the game screen in a flash and displayed boring “productivity” content, like spreadsheets, instead. Some games, like Space Quest 3, even made a joke of it.

I can’t comment on the effectiveness of the Boss Key, but it wouldn’t make much sense in today’s world, even as a joke. If you were in the workforce back then and actually used it to keep your at-work gaming hidden, please let me know. 😉

Now, in 2023, organizations have largely adjusted to hybrid work, but we need to be careful not to stumble into another critical mistake: one that stems from our cloud-centric, “work from anywhere and on any device” world.

To put it concisely, we’re sitting on significant and rapidly growing estates of valuable data in the cloud and we aren’t doing enough to help people handle it responsibly. To avoid past mistakes, we need to be able to identify risky behavior but do it without compromising privacy.

When it comes to handling organizational data, we also need to be able to qualify the risky behavior we detect. I reckon you’ll find that most risks don’t arise from truly malicious intent, but from things like negligence, a lack of training and a culture that doesn’t properly and positively reinforce secure working practices.

Attackers or insiders?

When talking about data security and insider risk, it’s a natural impulse to treat risky insiders like external attackers. Indeed, there’s a very real overlap in how I would approach both. It comes down to the classics: identify the risk, protect your data and detect risky behavior.

The key differences start when we talk about how to respond and recover.

Let’s talk a bit about attackers and insiders.

Attackers

- Don’t initially have any legitimate access to your systems

- Primarily driven by financial, political or ideological goals

- Can cause widespread damage across systems and data

- Activities are often detectable because they fall outside the scope of an account’s typical activity

- Response measures are varied, strong and technical, like account closures, workstation isolation, firewall rules etc.

- The aim of the response is to prevent the attacker from maintaining unauthorized access to data, systems and networks, and to prevent damage to or alteration of them.

Negligent risky insiders

- Negligent employees likely make up the bulk of your insider risk today: depending on the size and profile of your workforce, as much as 99% or even 99.9%+ of all risky insider events could be driven by negligence.

- As employees, they already have legitimate access to your systems, which they need to.. you know, do their jobs.

- Negligent risky activities have mundane causes: time pressure, a lack of technical know-how, a weak security culture in the workplace and, of course, personal indifference to organizational policy.

- Risky actions include things like oversharing confidential documents, downloading business data to insecure external USB drives for convenience, exposing internal information by discussing it in a public place and using work devices for private activities that inadvertently put the device’s security at risk.

- Risky insider actions are often detectable because they are anomalous compared with the person’s own typical baseline, the typical baseline of their peers or the baseline of the entire organization.

- In some cases, an action is enough by itself to enable detection: for example, copying documents labeled Secret to a USB thumb drive, which may explicitly violate established guidelines.

- The damage caused by negligent insiders is typically very localized and limited in scope, but can still be significant depending on the risk level and value of the data involved.

- Since negligent risky actions aren’t driven by malicious aims and the person is likely motivated to keep working for your organization, detected risks are typically remediated with softer measures like security training and context-specific guidance (such as Microsoft Purview DLP Policy Tips). Fostering a responsible, security-aware workplace culture helps immensely.

- The privacy of negligent insiders should be protected as far as possible. To maintain employee trust, we should avoid treating people in need of training as attackers and threats. Failing to do so can create an adversarial environment in the workplace, which can further feed insider risk. Nobody wants to feel like a liability.

- Negligent risky employees typically respond well to positive reinforcement and training.
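To make the idea of comparing activity against baselines a bit more concrete, here is a minimal Python sketch. It assumes you already have daily counts of a single activity type (say, files downloaded) for the person, their peer group and the organization, and it flags today’s count when it sits at least three standard deviations above a baseline. The function names, the sample numbers and the threshold of 3 are illustrative assumptions; this is not how Insider Risk Management or any other product actually computes anomalies.

```python
import math
from statistics import mean, stdev


def z_score(value: float, history: list[float]) -> float:
    """Number of standard deviations between `value` and the mean of `history`."""
    if len(history) < 2:
        return 0.0  # too little history to form a baseline
    m, sd = mean(history), stdev(history)
    if sd == 0:
        # Perfectly flat baseline: any deviation at all is treated as extreme.
        return 0.0 if value == m else math.copysign(math.inf, value - m)
    return (value - m) / sd


def flag_anomalies(today, user_hist, peer_hist, org_hist, threshold=3.0):
    """Return the baselines (self, peers, org) that today's count exceeds."""
    baselines = {"self": user_hist, "peers": peer_hist, "org": org_hist}
    return [name for name, hist in baselines.items() if z_score(today, hist) >= threshold]


# Illustrative daily file-download counts (made-up data, not real telemetry)
user_hist = [8, 12, 10, 9, 11, 10, 12]
peer_hist = [15, 20, 18, 25, 30, 22, 19]
org_hist = [5, 40, 12, 60, 25, 33, 18, 50]

print(flag_anomalies(45, user_hist, peer_hist, org_hist))  # ['self', 'peers']
print(flag_anomalies(11, user_hist, peer_hist, org_hist))  # []
```

In this example, 45 downloads is unusual for the person and for their team, but not for the organization as a whole. That kind of context is exactly what helps decide whether something merits a closer look or just a friendly nudge.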

Malicious risky insiders

- Malicious insiders aim to actively and knowingly circumvent company policy for personal gain.

- Like negligent insiders, they also have legitimate access to valuable information and systems.

- Identifying and preventing these cases is a core goal of an Insider Risk program, although you won’t typically see many of them each year: possibly (and hopefully!) somewhere between zero and five.

- Malicious insider activity can be driven by many motivators. Financial gain is one, but there are also others, such as:

- Personal grievances with others in the organization

- Ideological differences with the organization’s direction

- External coercion and blackmail

- Ego and desire for recognition

- In cases of extreme disgruntlement, even a desire to sabotage or otherwise harm the organization at large.

- A feeling of ownership of the work a person has done can also play a part:

- Imagine you spent months working on a complicated technical or process architecture, the completion of which you consider a professional milestone. You feel proud of the work you did.

- Soon afterwards, you get laid off. You could well feel entitled to take the work you spent so much effort on with you, even though any tangible content you created usually belongs to the organization.

- Malicious activities and intent are tricky to identify, but looking at sequences of events is a proven approach. For example:

- Packing sensitive documents into a ZIP file isn’t that bad by itself, but..

- Downloading a stack of sensitive documents from SharePoint to your work computer less than 90 days before your employment contract ends, then removing the sensitivity label from the files, packing them into a ZIP file and finally sending the archive to your private email address definitely is.

- When risky activity is identified as not simply negligent but malicious, it is typically time to lift the strongest privacy protections.

- The response to a confirmed malicious insider depends on the nature of the risky actions they took, but it typically involves at least Legal, HR and possibly the authorities in some way.

- The aim of your response here is primarily to prevent loss of control over valuable organizational data and damage to its integrity. (Securing the employee’s identity is important too, but it is not the overriding concern.)
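The SharePoint-to-ZIP-to-private-email example can be sketched as ordered sequence matching. This minimal Python sketch assumes a hypothetical, already-collected event stream for a single user with made-up action names (download_sensitive, remove_label, create_zip, email_personal), a 7-day window for the whole sequence and a known contract end date. It only illustrates the idea; real detections draw on far more signal and, crucially, on human review.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Event:
    time: datetime
    action: str  # hypothetical action names, not a real audit log schema


# Hypothetical ordered pattern mirroring the exfiltration example in the text
EXFIL_PATTERN = ["download_sensitive", "remove_label", "create_zip", "email_personal"]


def matches_sequence(events, pattern, window):
    """True if `pattern` occurs in order (other events may interleave),
    with every step falling within `window` of the first step."""
    events = sorted(events, key=lambda e: e.time)
    for i, first in enumerate(events):
        if first.action != pattern[0]:
            continue
        step = 1
        if step == len(pattern):
            return True
        for later in events[i + 1:]:
            if later.time - first.time > window:
                break  # too late for this starting point; try the next one
            if later.action == pattern[step]:
                step += 1
                if step == len(pattern):
                    return True
    return False


def is_high_risk(events, contract_end, now, window=timedelta(days=7)):
    """Flag the pattern only for users whose contract ends within 90 days."""
    leaving_soon = timedelta(0) <= contract_end - now < timedelta(days=90)
    return leaving_soon and matches_sequence(events, EXFIL_PATTERN, window)


t0 = datetime(2023, 5, 2, 9, 0)
events = [
    Event(t0, "download_sensitive"),
    Event(t0 + timedelta(hours=1), "open_document"),
    Event(t0 + timedelta(hours=2), "remove_label"),
    Event(t0 + timedelta(hours=3), "create_zip"),
    Event(t0 + timedelta(days=1), "email_personal"),
]
now = t0 + timedelta(days=1)

print(is_high_risk(events, contract_end=datetime(2023, 6, 30), now=now))  # True
print(is_high_risk(events, contract_end=datetime(2024, 6, 30), now=now))  # False
print(matches_sequence([Event(t0, "create_zip")], EXFIL_PATTERN, timedelta(days=7)))  # False
```

Each step on its own is fairly innocent. It’s the ordered combination, in the context of an upcoming departure, that turns it into something worth escalating to a human reviewer.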

The challenge of scale

Sifting through the immense volume of technical event logs available for every internal user, while maintaining privacy protections with an “innocent until proven guilty” approach, is a daunting task. Additionally, without some kind of AI-driven tooling, finding anomalies against each individual user’s unique baseline is borderline impossible at scale.
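One common way to keep “innocent until proven guilty” workable at scale is pseudonymization: analysts triage activity under stable aliases, and the real identity is only revealed to authorized reviewers once a case is escalated. A minimal sketch, assuming a secret key managed outside the analysts’ reach and a hypothetical Pseudonymizer class (not a product API), could look like this:

```python
import hashlib
import hmac


class Pseudonymizer:
    """Maps user IDs to stable aliases; re-identification is gated (hypothetical design)."""

    def __init__(self, key: bytes):
        self._key = key     # secret key, assumed to be stored outside analysts' reach
        self._reverse = {}  # alias -> real user ID, for authorized re-identification only

    def alias(self, user_id: str) -> str:
        # Keyed hash (HMAC-SHA256): the same user always gets the same alias,
        # but the alias can't be linked back to the user without the key.
        normalized = user_id.strip().lower()
        digest = hmac.new(self._key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()
        alias = "user-" + digest[:12]
        self._reverse[alias] = normalized
        return alias

    def reveal(self, alias: str, authorized: bool) -> str:
        # In practice, `authorized` would come from an approval workflow
        # (for example, a case escalated to HR and Legal).
        if not authorized:
            raise PermissionError("Re-identification requires an escalated, approved case")
        return self._reverse[alias]


p = Pseudonymizer(key=b"replace-with-a-managed-secret")
a = p.alias("Alice@contoso.com")
print(a)  # a stable alias of the form user-<12 hex characters>
```

Analysts can still correlate all of one alias’s activity over time, which is what baseline and sequence analysis need, without knowing who is behind it until there is a justified reason to find out.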

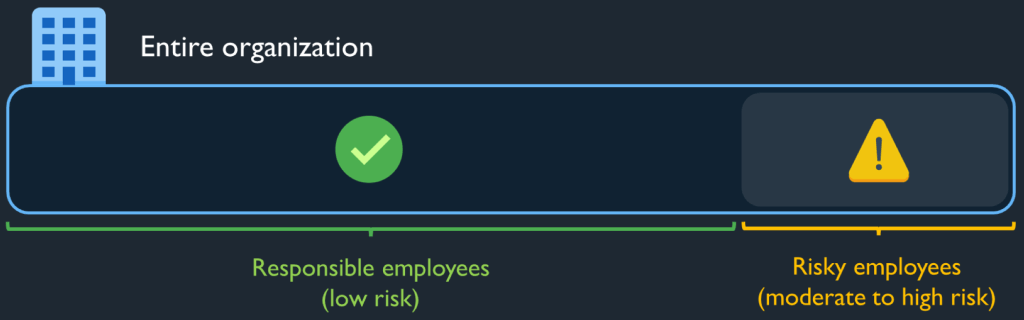

Even with AI helping you distinguish risky employees from the responsible majority, you still need help determining whether the actions taken are malicious or simply negligent. Here, proper tooling can help you paint a clearer picture, but human expertise and judgment are also required.

Luckily, the tooling exists to help with both of these tasks. Microsoft’s Insider Risk Management (part of the Microsoft 365 E5 and E5 Compliance licenses) can enable an insider risk team to scale their activities while providing the necessary and critical privacy protections.

Looking forward

If you want to start on the path to managing insider risk, ponder a few questions:

- Which data is most at risk and most valuable for us?

- Which groups of individuals pose the highest insider risk because of their role and/or privileges?

- What are the greatest risks that can manifest for your organization from a negligent or malicious insider event?

- Aside from IT and Legal, which other functions / roles in your organization would you involve in your insider risk program?

Going forward, I aim to continue discussing topics around insider risk in more detail, looking into tooling, processes, roles and responsibilities. The bottom line is this:

- There is no one-size-fits-all solution – and anyone offering one is probably trying to sell you something..

- ..but there are strong commonalities between successful approaches that we can learn from and look to incorporate.

The time to start building capabilities to discover and manage insider risk is now.
