This article is part of a series discussing my experiences fine-tuning IRM policies. To start from the beginning, check out part 1.

Part 1

- The importance of accurate risk scoring

- Defining excluded, unallowed and 3rd party business domains

- Creation and use of detection groups to build indicator variants in place of or alongside built-in indicators

Part 2

- Choosing the relevant indicators for each scenario

- Regular fine-tuning passes for each policy’s indicator thresholds, based on analytics and other considerations

Part 3

- Creating policies based on priority user groups for high-value / high-risk roles & individuals

- Leveraging various risk score boosters

- Thoughtful use of priority content

- Use of cumulative exfiltration detection refined by peer groups through Entra ID enrichment & integration

- Configuring various types of global exclusions

- Undiscussed fine-tuning options

Priority user groups (PUGs)

Virtually every organization has one or more groups of employees who have an elevated level of access to sensitive information and associated risk compared to others – for example:

- C-levels & leadership

- R&D (Research & Development)

- M&A (Mergers & Acquisitions)

- Legal & Compliance

For these groups of individuals, the markedly higher associated risk can warrant setting up dedicated Insider Risk Management policies and other controls to help protect both the individuals and the business from financial and/or reputational damage through unauthorized data exfiltration or exposure. For large Insider Risk teams, you might also want to limit which investigators (possibly under extra-strict NDAs) can look into alerts from these high-risk users.

Priority user groups – or PUGs for all you abbreviation lovers – are the tool we can use to achieve all this.

PUG creation

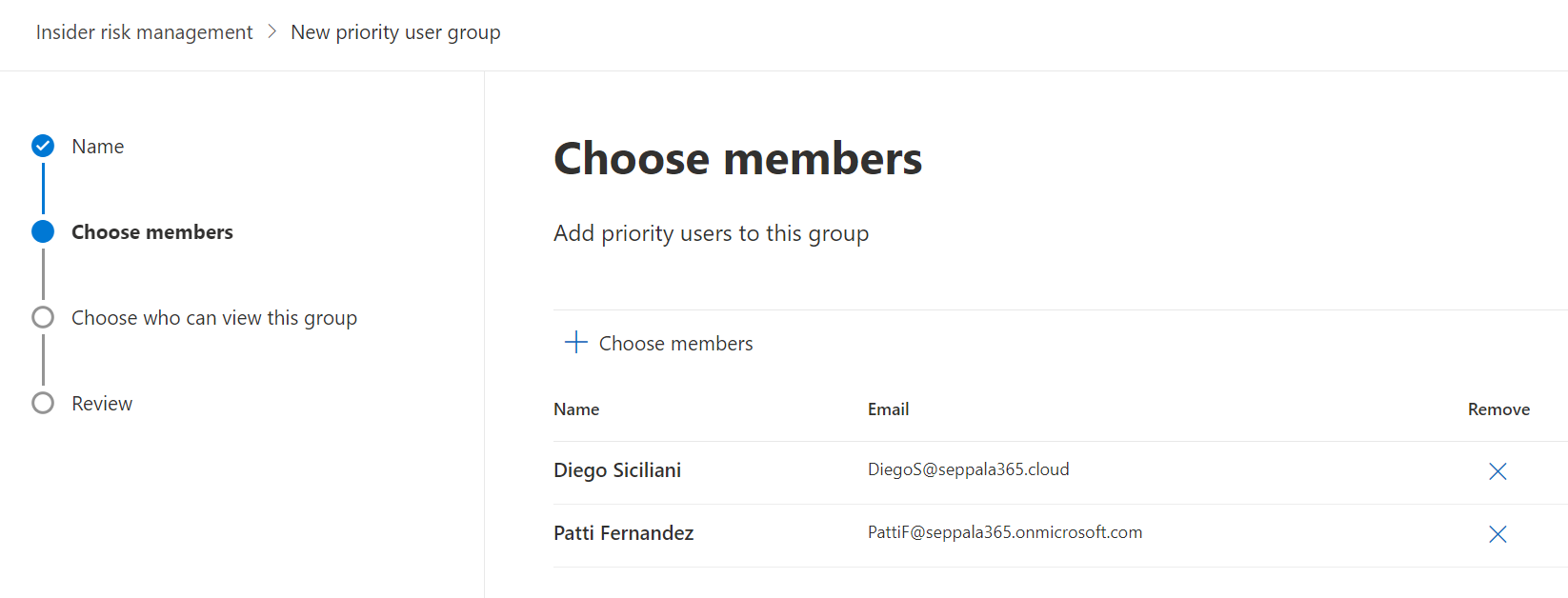

Creating a PUG is a simple three-step process: name the group and add a description, add members, and finally choose which users can view data involving the members of the priority group.

It is the third and final step that requires your attention. This functionality is similar to (but separate from) Entra ID Admin Units: you need to designate the users or IRM role groups that are allowed to see events in IRM related to users in the priority user group.

Defining who can view data on members of a priority group is a mandatory step. Luckily, you can still create practically unrestricted priority user groups by permitting the Insider Risk Management, Insider Risk Management Analysts and Insider Risk Management Investigators role groups to view events related to the priority user group.

I suggest only limiting access to events from a priority user group if this is supported by your real-life insider risk investigation process and role designations. Otherwise, you risk creating unplanned blind spots for your IRM team.
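To make the viewing rule concrete, here is a minimal Python sketch of the access check a priority user group implies. It is a conceptual model under my own assumptions, not a Purview API: the `PriorityUserGroup` class, the `can_view_events` function and the example accounts are hypothetical, and only the three role group names come from the text above.

```python
# Conceptual model of the "who can view" step of a priority user group.
# PriorityUserGroup and can_view_events are hypothetical; this is not a Purview API.
from dataclasses import dataclass, field
from typing import Set

# The three IRM role groups mentioned above; allowing all of them gives a
# practically unrestricted priority user group.
UNRESTRICTED_VIEWERS = frozenset({
    "Insider Risk Management",
    "Insider Risk Management Analysts",
    "Insider Risk Management Investigators",
})


@dataclass
class PriorityUserGroup:
    name: str
    members: Set[str]
    allowed_users: Set[str] = field(default_factory=set)
    allowed_role_groups: Set[str] = field(default_factory=set)


def can_view_events(pug: PriorityUserGroup, viewer: str, viewer_role_groups: Set[str]) -> bool:
    """True if the viewer is named directly or holds at least one allowed role group."""
    return viewer in pug.allowed_users or bool(viewer_role_groups & pug.allowed_role_groups)


# Practically unrestricted group: every IRM role group may view its events.
leadership = PriorityUserGroup(
    name="C-levels",
    members={"ceo@contoso.com"},
    allowed_role_groups=set(UNRESTRICTED_VIEWERS),
)

# Restricted group: only one named investigator may view its events.
m_and_a = PriorityUserGroup(
    name="M&A",
    members={"deal.lead@contoso.com"},
    allowed_users={"senior.investigator@contoso.com"},
)

analyst_groups = {"Insider Risk Management Analysts"}
print(can_view_events(leadership, "analyst@contoso.com", analyst_groups))  # True
print(can_view_events(m_and_a, "analyst@contoso.com", analyst_groups))     # False
```

The restricted variant illustrates the blind-spot risk: an analyst who would normally triage the alert simply cannot see it.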

Scoping policies to PUGs

Once you have defined at least one priority user group, you can start creating special variants of certain IRM policies based on it. The two policy scenarios that currently support PUGs are:

- Data leaks by priority users, covering all kinds of intentional, negligent and accidental exfiltration and oversharing of sensitive data. This type of policy is triggered by one of the following:

- Matches to a selected Data Loss Prevention policy

- Selected individual exfiltration activities (these are more common and will trigger the IRM policy often, although triggering IRM won’t necessarily lead to a visible alert)

- Selected sequences of activities (almost certain to be of interest when triggered)

- Security policy violations by priority users, which triggers an IRM evaluation when Defender for Endpoint detects possible tampering and defense evasion attempts by priority users on managed workstations. I could see this type of policy being especially useful for PUGs covering privileged technical roles like IT administrators.

IRM alert fidelity is the key goal for any fine-tuning efforts. An additional benefit here is that PUG-specific policies can be fine-tuned separately from other org-level policies using all of the available policy-level tools. PUG membership itself can also be used as a reason to boost risk scores of alerts in any policy – see the next chapter on risk score boosters for more on this.

For policies scoped to priority groups, I usually look to lower certain alert thresholds and might also adjust priority content selection, depending on the nature of each PUG – generating 20-30% more benign alerts is far less of an issue in real life when we’re talking about a priority group policy for 10 people compared to an org-level policy scoped to 10 000 people. Insider Risk Management fine-tuning is all about managing scale.
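A bit of back-of-the-envelope arithmetic shows why. The benign alert rate below is a made-up placeholder (one benign alert per 20 users per month), not a Purview statistic; the point is only that the same relative increase costs very different absolute effort depending on policy scope.

```python
# Worked example of the scale argument. HYPOTHETICAL_RATE is an assumed
# placeholder, not a measured or published Purview figure.

def extra_benign_alerts(users: int, benign_alerts_per_user: float, increase: float) -> float:
    """Additional benign alerts per month after loosening thresholds by `increase` (0.3 = 30%)."""
    baseline = users * benign_alerts_per_user
    return baseline * increase


HYPOTHETICAL_RATE = 0.05  # assumed: one benign alert per 20 users per month

for scope, users in [("PUG policy", 10), ("org-level policy", 10_000)]:
    extra = extra_benign_alerts(users, HYPOTHETICAL_RATE, 0.30)
    print(f"{scope}: ~{extra:.2f} extra benign alerts per month")
```

Under that assumption, a 30% increase adds well under one extra alert per month for a 10-person PUG, versus roughly 150 for a 10 000-person scope.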

Limitations

As of 5/2024 there are some important limitations to consider:

- For now, Priority User Groups can’t be based on Entra ID groups, dynamic or otherwise; their membership can only be updated manually. I wouldn’t be surprised if improved Entra ID integration showed up in the future.

- IRM policies scoped to priority user groups don’t support Admin Units, which are otherwise used to ring-fence IRM analysts’ and investigators’ visibility to specific users.

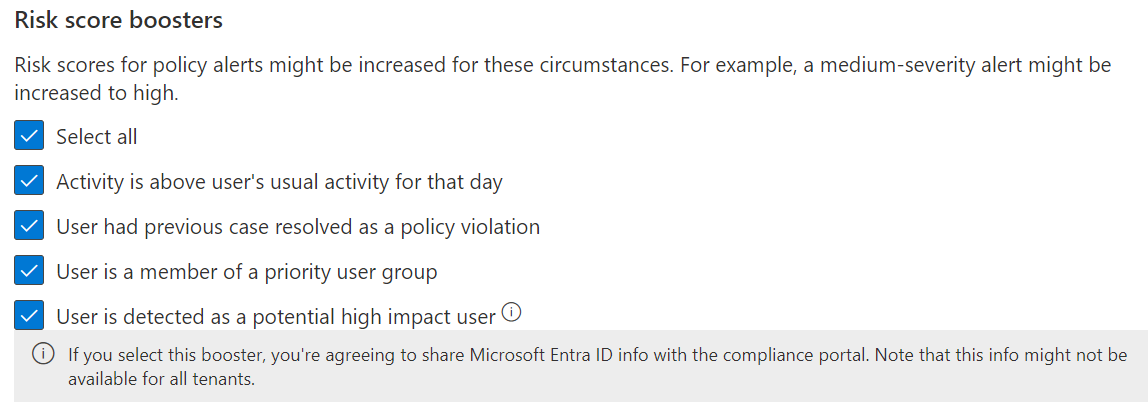

Risk score boosters

Risk score boosters are a special type of workload-agnostic, AI & ML driven indicator you can choose to include in an IRM policy.

As of 5/2024, there are four flavors of booster available. Two of them don’t have any special prerequisites or considerations:

- Activity is above user’s usual activity for that day: A clear-cut comparison to the user’s own baseline. Very valuable for understanding anomalous behavior – and very tricky to do without AI-driven tools like IRM.

- User had previous case resolved as a policy violation: Allows increased risk score weighting for confirmed repeat offenders. “Fool me once, shame on you. Fool me twice, shame on me.”
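Microsoft doesn’t document how the first booster’s baseline is calculated, so the following is only a minimal sketch of the general idea, assuming a simple z-score against the user’s own recent daily activity counts. The function name, the history values and the threshold of 3 standard deviations are my own illustrative choices.

```python
# Sketch of "above the user's own baseline" using a z-score.
# This is an assumed technique for illustration, not IRM's actual model.
from statistics import mean, pstdev
from typing import List


def above_usual_activity(history: List[int], today: int, z_threshold: float = 3.0) -> bool:
    """Flag today's count if it is more than z_threshold standard deviations above the user's mean."""
    if len(history) < 2:
        return False  # not enough baseline to judge
    mu = mean(history)
    sigma = pstdev(history)
    if sigma == 0:
        return today > mu  # perfectly flat history: any increase stands out
    return (today - mu) / sigma > z_threshold


history = [4, 6, 5, 5, 7, 4, 6]  # hypothetical daily exfiltration activity counts for one user
print(above_usual_activity(history, today=7))   # False
print(above_usual_activity(history, today=40))  # True
```

Comparing against the user’s own history rather than colleagues’ is what separates this booster from the cumulative exfiltration detection indicators covered later in this article.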

The two other boosters have some prerequisites:

- User is a member of a priority user group: If you read the previous chapter, you’ll know what this is all about. Great for helping ensure alerts are raised for selected users with exceptional levels of sensitive data access and / or risk involved with their role.

- User is detected as a potential high impact user: Let’s take a moment to understand what a “potential high impact user” actually means here. This is Microsoft’s way of saying the user’s Entra ID identity seems especially risky based on a few selected metrics that rely on Entra ID being properly enriched with HR data – the countless benefits of which I wrote about in detail earlier. A high impact user is someone who:

- Has an exceptionally high number of direct reports

- Handles more sensitive information (files with a sensitivity label high in the sensitivity label taxonomy priority order) than others

- Has a privileged role in Entra ID, like Compliance Administrator.

- Is high up in the organization’s hierarchy, for example any C-level executive.

- Note: This booster does -not- apply a double layer of risk score elevation if the same user is also part of a priority user group.
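The non-stacking behavior is easiest to see in code. This is a minimal sketch with hypothetical weights and a hypothetical cap of 100; Purview does not publish how much each booster adds, so only the structure (PUG membership and high impact status sharing a single elevation) reflects the note above.

```python
# Hypothetical booster weights and cap; Purview does not publish its actual weighting.
BOOST_ABOVE_USUAL_ACTIVITY = 10
BOOST_PRIOR_VIOLATION = 10
BOOST_PRIORITY_OR_HIGH_IMPACT = 10
MAX_SCORE = 100


def boosted_score(base: int, *, above_usual: bool, prior_violation: bool,
                  in_priority_group: bool, high_impact: bool) -> int:
    """Apply the hypothetical booster weights to a base risk score."""
    score = base
    if above_usual:
        score += BOOST_ABOVE_USUAL_ACTIVITY
    if prior_violation:
        score += BOOST_PRIOR_VIOLATION
    # PUG membership and the high impact booster share one elevation,
    # so a user who is both still gets it only once.
    if in_priority_group or high_impact:
        score += BOOST_PRIORITY_OR_HIGH_IMPACT
    return min(score, MAX_SCORE)


print(boosted_score(50, above_usual=False, prior_violation=False,
                    in_priority_group=True, high_impact=False))  # 60
print(boosted_score(50, above_usual=False, prior_violation=False,
                    in_priority_group=True, high_impact=True))   # 60
```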

I almost always include every available booster in IRM policies I configure. AI-driven contextual weighting of risk scores is what I see as one of the essential differentiators of IRM as a tool for triaging and managing insider risk.

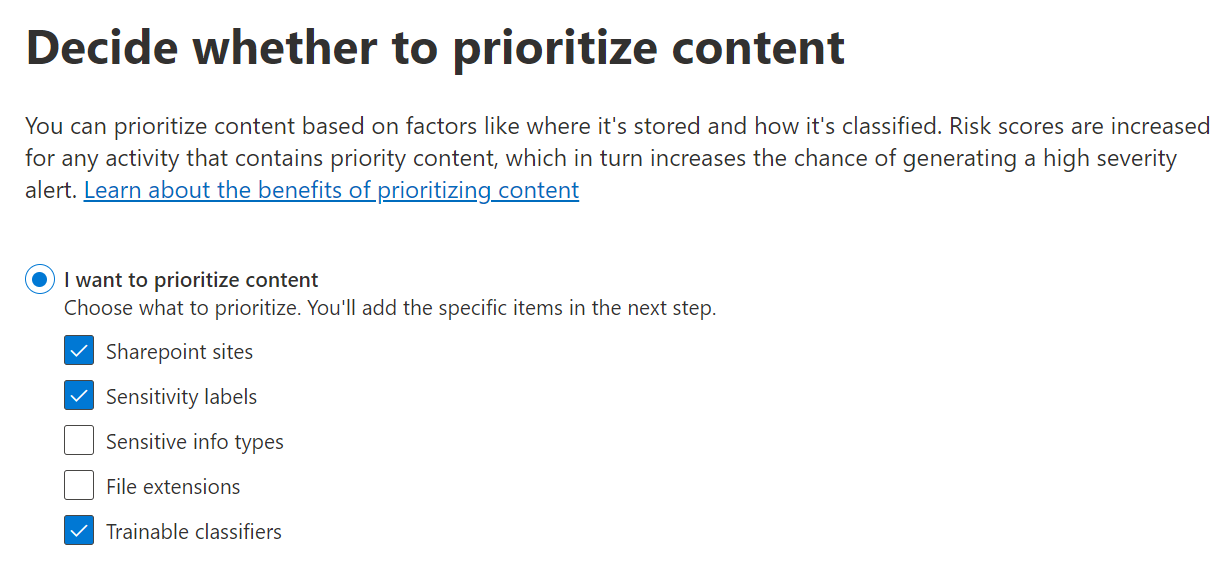

Priority content

Not all data is made equal, which is why we have tools like sensitivity labels in the first place – to separate the metaphorical nuggets of gold from all of the piles of sand clogging up our Microsoft 365 services.

Insider Risk policies allow you to prioritize content of various types, granting events dealing with that type of content a higher-than-normal weight when assigning risk scores. As of 5/2024, you can prioritize the following:

- Individual SharePoint Online sites

- Content with specific sensitivity labels

- Content matching sensitive information types

- Specific file extensions

- Content matching one or more of up to five chosen trainable classifiers

Here are a few very common ways I tend to use priority content:

- I use Content Search and Defender for Cloud Apps File Policies to identify SharePoint sites with significant accumulations of specific types of sensitive information like personal data, credit card numbers and cleartext technical credentials. I then flag them as priority sites.

- I prioritize any higher-order sensitivity labels like Confidential and Secret.

- For policies scoped to a specific department, I prioritize ML-based trainable classifiers related to the work of that department. Let’s take C-levels – I would prioritize trainable classifiers like Business plan, M&A files, Sales and revenue or perhaps Agreements.

- If an organization has any custom sensitive information types, they often end up as prioritized content. Credit card numbers, personal data and technical credentials are also commonly favoured in policies I’ve worked with.
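The site-flagging step in the first bullet boils down to aggregating sensitive information type matches per site and applying a cut-off. Here is a minimal sketch assuming you have already exported rows of (site URL, SIT name, match count) from your discovery tooling; the row format, example URLs, SIT names, counts and threshold are all hypothetical, and the output is just a shortlist to review before adding sites as priority content.

```python
# Shortlist SharePoint sites with heavy accumulations of watched SITs.
# Input format and all values are hypothetical examples.
from collections import defaultdict
from typing import Iterable, List, Set, Tuple

Row = Tuple[str, str, int]  # (site URL, sensitive information type, match count)


def flag_priority_sites(rows: Iterable[Row], watched_sits: Set[str], min_matches: int) -> List[str]:
    """Return site URLs whose combined matches for the watched SITs reach min_matches."""
    totals = defaultdict(int)
    for site, sit, count in rows:
        if sit in watched_sits:  # unwatched (possibly noisy) SITs are ignored
            totals[site] += count
    return sorted(site for site, total in totals.items() if total >= min_matches)


rows = [
    ("https://contoso.sharepoint.com/sites/Finance", "Credit Card Number", 420),
    ("https://contoso.sharepoint.com/sites/Finance", "U.S. Physical Addresses", 900),
    ("https://contoso.sharepoint.com/sites/Marketing", "Credit Card Number", 3),
    ("https://contoso.sharepoint.com/sites/IT", "General Password", 150),
]
watched = {"Credit Card Number", "General Password"}
print(flag_priority_sites(rows, watched, min_matches=100))
# ['https://contoso.sharepoint.com/sites/Finance', 'https://contoso.sharepoint.com/sites/IT']
```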

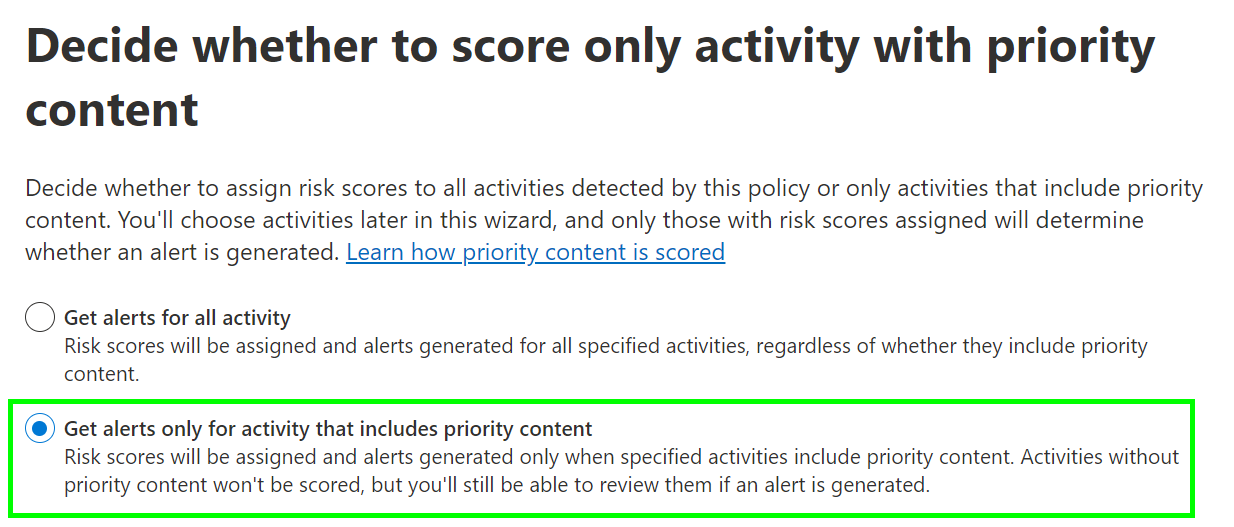

Also consider that you can set up IRM policies that only trigger when priority content is detected in trigger activities. I use such policies as an additional layer on top of broader and more inclusive “baseline” policies to help zoom in on high-value activities. This possibility enables creating highly targeted policies when combined with policies like Data leaks by priority users, described in the first chapter.

As an illustrative example, you can create policies by combining these two fine-tuning options which, in effect, could look for Secret or Confidential data leaks by C-level users – and nothing else.
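Conceptually, stacking those two options narrows the policy to the intersection of two sets: events by priority group members, and events touching priority content. A minimal sketch under assumed names (the `ExfilEvent` type, the `scope_events` function, the accounts and the Secret / Confidential labels are hypothetical examples, not a Purview data model):

```python
# Intersection of "priority user" and "priority content" scoping.
# ExfilEvent and scope_events are hypothetical illustrations.
from dataclasses import dataclass
from typing import Iterable, List, Optional, Set


@dataclass(frozen=True)
class ExfilEvent:
    user: str
    activity: str
    sensitivity_label: Optional[str]  # None when the content is unlabeled


def scope_events(events: Iterable[ExfilEvent], pug_members: Set[str],
                 priority_labels: Set[str]) -> List[ExfilEvent]:
    """Keep only events by priority group members that involve priority-labeled content."""
    return [
        e for e in events
        if e.user in pug_members and e.sensitivity_label in priority_labels
    ]


c_levels = {"ceo@contoso.com", "cfo@contoso.com"}
priority_labels = {"Secret", "Confidential"}
events = [
    ExfilEvent("ceo@contoso.com", "Copy to USB", "Secret"),
    ExfilEvent("ceo@contoso.com", "Upload to cloud", "General"),
    ExfilEvent("intern@contoso.com", "Copy to USB", "Confidential"),
    ExfilEvent("cfo@contoso.com", "Print", None),
]
for e in scope_events(events, c_levels, priority_labels):
    print(e.user, e.activity, e.sensitivity_label)  # ceo@contoso.com Copy to USB Secret
```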

Cumulative exfiltration detection (CED)

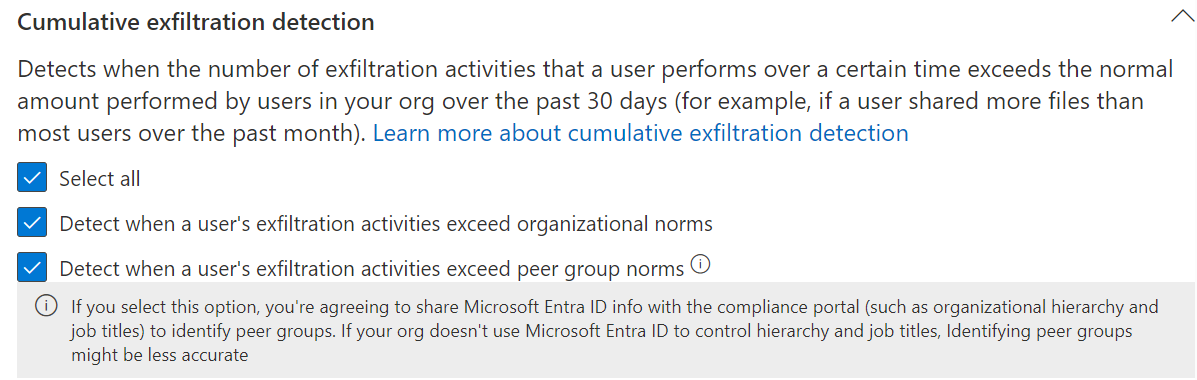

Similarly to risk score boosters, Cumulative Exfiltration Detection (CED) indicators are a special workload-agnostic and AI-driven option for elevating the risk scoring of a user’s activities. However, unlike risk score boosters, CED indicators compare a user’s activity to a rolling 30-day baseline from other users in the organization.

CED indicators come in two flavors:

- User’s exfiltration activities exceed organizational norms: Requires no Entra ID enrichment and is available without any fuss. The net benefit of comparing people’s activities to absolutely everyone in the tenant is questionable in many cases since different roles need to handle data in so many different ways.

- User’s exfiltration activities exceed peer group norms: This option requires proper Entra ID enrichment to function correctly. What constitutes a peer group is important to understand here. The concept is based on a few factors:

- Organizational hierarchy means direct report structures in Entra ID. People under the same branch of direct reports can be considered a peer group.

- People sharing the same Entra ID job title also form a peer group. Your mileage may vary here depending on how consistently the organization uses titles to begin with. I’ve seen organizations with the equivalent of 10000 titles per 15000 users, which would obviously not help here.

- Interestingly, having access to shared SharePoint resources (like sites) is also considered a criterion for forming a peer group. I’ve never gotten more details on what the parameters here are – does the site need to be in active use and contain a minimum amount of data before it is considered?
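The difference between the two CED flavors comes down to which population a user is compared against. The sketch below is my own simplification, not Microsoft’s model: it assumes 30-day exfiltration totals are already computed per user, builds peer groups from a shared manager or shared job title (two of the three factors above; SharePoint co-access is left out because its parameters aren’t documented), and uses a hypothetical "more than three times the group median" rule. All users and totals are made up.

```python
# Org-wide vs peer-group comparison of 30-day exfiltration totals.
# Peer definition, threshold rule and data are hypothetical.
from dataclasses import dataclass
from statistics import median
from typing import Dict, List


@dataclass(frozen=True)
class User:
    upn: str
    manager: str
    job_title: str


def peers_of(user: User, directory: List[User]) -> List[User]:
    """Hypothetical peer group: other users sharing the manager or the job title."""
    return [
        u for u in directory
        if u.upn != user.upn and (u.manager == user.manager or u.job_title == user.job_title)
    ]


def exceeds_norm(user_total: int, comparison: List[int], factor: float = 3.0) -> bool:
    """Hypothetical rule: flag totals above `factor` times the comparison group's median."""
    if not comparison:
        return False
    return user_total > factor * median(comparison)


directory = [
    User("dev1@contoso.com", "cto@contoso.com", "Developer"),
    User("dev2@contoso.com", "cto@contoso.com", "Developer"),
    User("dev3@contoso.com", "cto@contoso.com", "Developer"),
    User("sales1@contoso.com", "cso@contoso.com", "Account Manager"),
    User("sales2@contoso.com", "cso@contoso.com", "Account Manager"),
    User("sales3@contoso.com", "cso@contoso.com", "Account Manager"),
    User("sales4@contoso.com", "cso@contoso.com", "Account Manager"),
]
# Hypothetical rolling 30-day exfiltration activity totals per user.
totals: Dict[str, int] = {
    "dev1@contoso.com": 120, "dev2@contoso.com": 100, "dev3@contoso.com": 110,
    "sales1@contoso.com": 5, "sales2@contoso.com": 6, "sales3@contoso.com": 4,
    "sales4@contoso.com": 60,
}

for user in (directory[0], directory[6]):
    org = [t for upn, t in totals.items() if upn != user.upn]
    peers = [totals[p.upn] for p in peers_of(user, directory)]
    print(user.upn, "org:", exceeds_norm(totals[user.upn], org),
          "peers:", exceeds_norm(totals[user.upn], peers))
```

With these made-up numbers, the org-wide comparison flags the high-volume developer (whose peers behave the same way) and misses the salesperson who stands out among colleagues, while the peer comparison does the opposite. That is the gist of why I find the organization-wide flavor questionable.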

Global exclusions

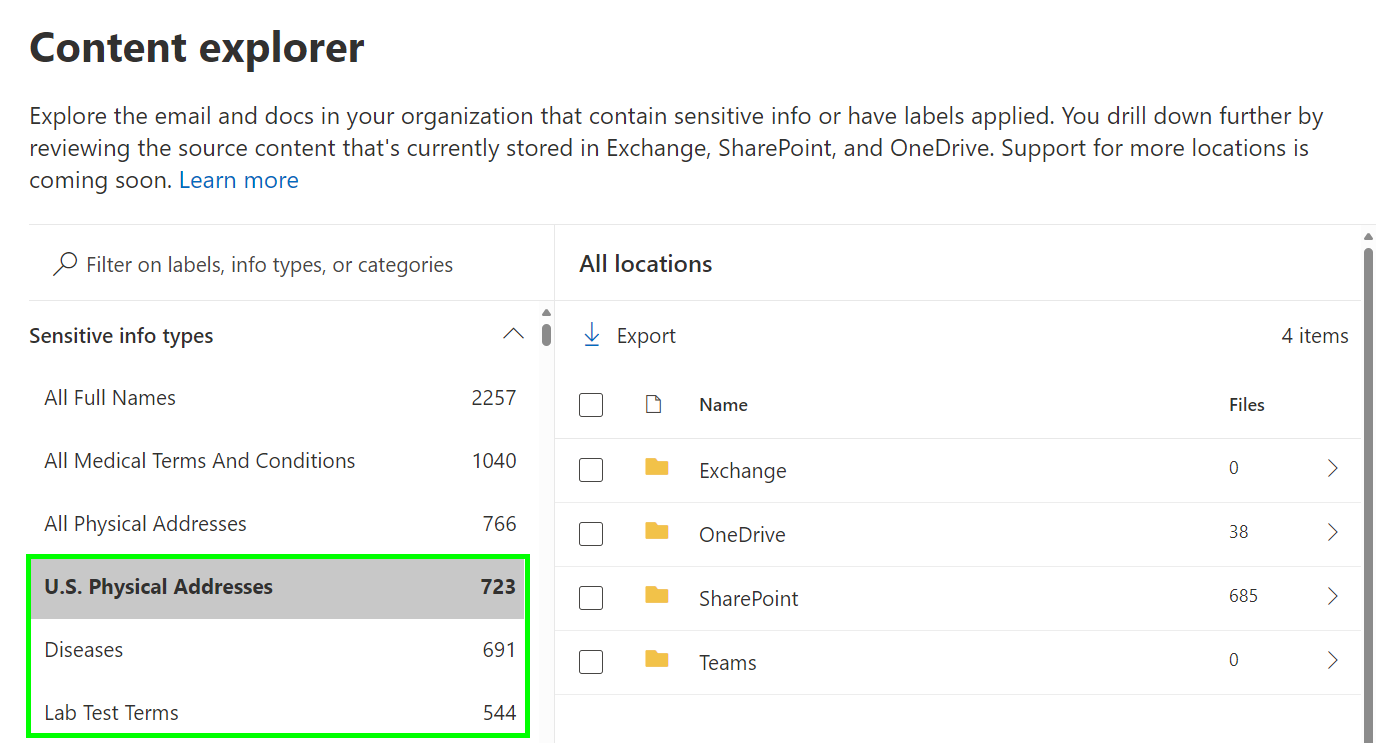

If you’ve ever taken a peek in your Compliance portal’s Content explorer, you’ll probably have noticed that Microsoft automatically performs classification against Sensitive Information Types for all supported content stored in OneDrive, SharePoint, Teams and Exchange Online.

A lot of the time, those content classifications are either entirely irrelevant to your business or just plain wrong. For example, my demo tenant purportedly has 723 files containing U.S. Physical Addresses and 691 documents dealing with diseases. Most of these detections are false positives. This doesn’t really matter in passive tools, although it still bugs me that we don’t have a confidence level filter in Content explorer.

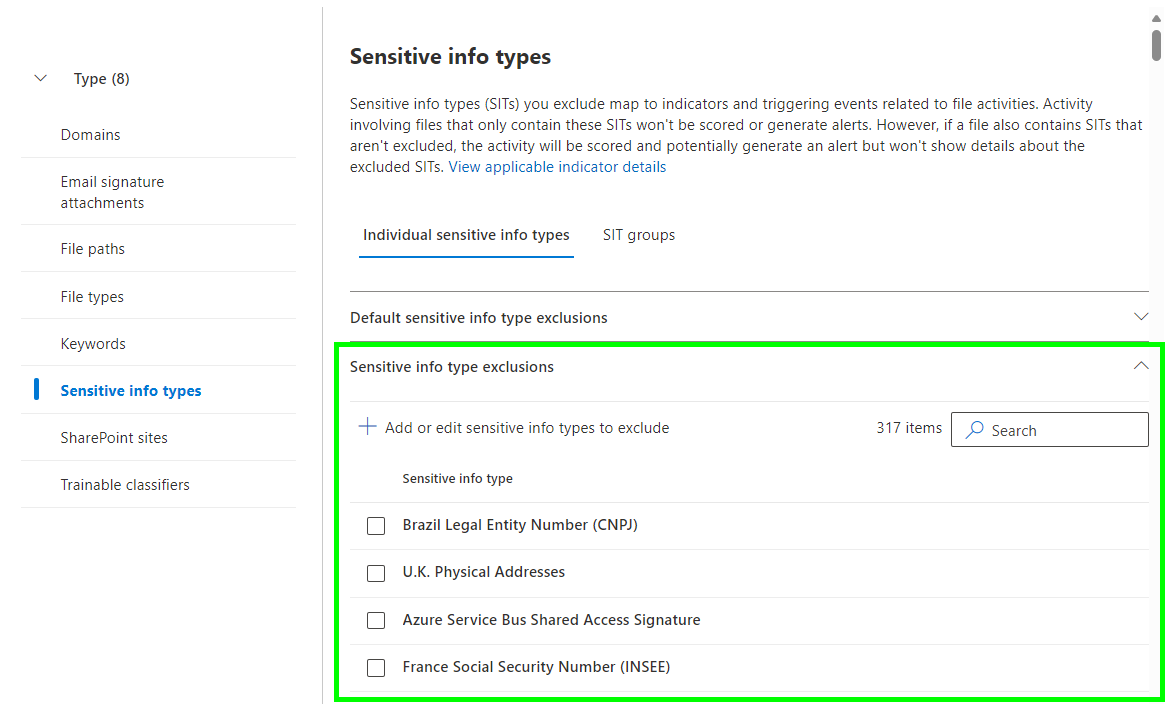

Where these business-irrelevant classifications start to matter is when they are factored into things like Insider Risk Management risk scoring. By default, IRM gives some weight to almost all built-in SITs, when in reality you might only consider a few of them to be relevant in investigations.

The same goes for many other pieces of information IRM evaluates: domains, file paths, file types, specific SharePoint sites, local and network share file paths, content with certain keywords (for ex. “private”), emails with signatures as attachments etc.

To help prevent irrelevant events from clogging up your IRM investigations, thoughtfully implementing exclusions across all of your policies is an essential step when fine-tuning IRM and a fitting topic to cap off this series for now.

Thanks to a fresh new IRM capability called global exclusions, we can now take action by excluding many different types of content, preventing uninteresting data points from affecting the assigned risk score of events.

I’ll go on record saying I absolutely love this one. For example, going forward I will likely look to globally exclude all sensitive information types except for the ones actually relevant to the organization I’m working with. No more alerts triggered by exfiltration of content flagged for Chile identity card numbers or something in that vein! By the way, no disrespect to Chile – it’s just that in Finnish tenants, true positives for this example scenario are fairly rare.
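The allowlist approach I described translates into simple set arithmetic: take the SITs that show up in your tenant, subtract the ones that matter, and exclude the rest. A minimal sketch; the SIT names are illustrative examples, the function names are hypothetical, and the code only computes the candidate list without touching Purview.

```python
# Allowlist-driven global exclusions as set arithmetic.
# SIT names are illustrative; function names are hypothetical.
from typing import Dict, List, Set


def sits_to_exclude(tenant_sits: Set[str], relevant_sits: Set[str]) -> List[str]:
    """Everything detected in the tenant except the organization's relevant SITs."""
    return sorted(tenant_sits - relevant_sits)


def apply_exclusions(detections: Dict[str, int], excluded: Set[str]) -> Dict[str, int]:
    """Drop excluded SITs from an event's detections before any risk weighting."""
    return {sit: n for sit, n in detections.items() if sit not in excluded}


tenant_sits = {
    "Chile Identity Card Number", "U.S. Physical Addresses",
    "Finland National ID", "Credit Card Number",
}
relevant = {"Finland National ID", "Credit Card Number"}

excluded = sits_to_exclude(tenant_sits, relevant)
print(excluded)  # ['Chile Identity Card Number', 'U.S. Physical Addresses']

event_detections = {"Chile Identity Card Number": 3, "Credit Card Number": 1}
print(apply_exclusions(event_detections, set(excluded)))  # {'Credit Card Number': 1}
```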

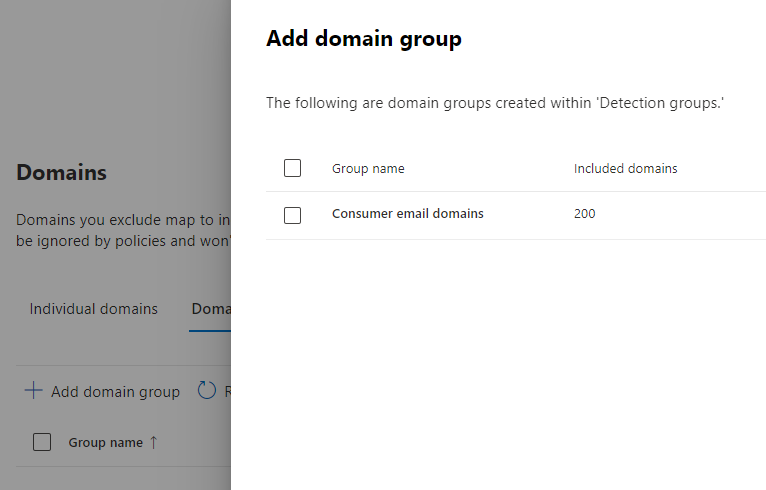

In the first part of this series, I detailed how detection groups are created and used to create variants of baseline indicators. The same groups you might have created to define indicator variants are also usable as global exclusions – pretty handy in some cases. Just take a peek under the groups tab and try adding a new group. Your existing detection groups should show up as options, like in the image below.

Undiscussed fine-tuning options

I have to mention that there are already a few more options available for fine-tuning IRM that I didn’t discuss in this series. This is because I like to have real-life experience with a capability before rattling on about it here – things in my blog need to be rooted in insights from work I do & not just best-guess vibes based on documentation. (There’s always a bit of that sprinkled in, of course.. 😉)

Notably:

- Custom indicators can be leveraged to bring in pretty much any signals from third-party systems that aren’t yet natively integrated with IRM.

- When a physical badging system is integrated with IRM, you can define priority physical assets like highly sensitive spaces for R&D, leadership, manufacturing etc. to boost risk scores of events generated by access attempts to those spaces.

Wrapping up..

For now, this article concludes my notes on fine-tuning Insider Risk Management. I’m fairly sure we’ll revisit the topic in the future once capabilities evolve and I get some more real-life experience under my belt.

I will end with the same sentiment I started this series with. Insider Risk Management, just like Sentinel and other SIEM tools, requires careful initial calibration to minimize distracting false positives and enhance the clarity of the investigation signal. Taking the time to perform this kind of tuning is vital for risk investigators to direct their attention to significant events efficiently and filter out the noise.

If the necessary groundwork for IRM is not meticulously executed, it can easily overwhelm investigation teams with inconsequential low-value alerts. On the other hand, if you do your homework and take the time to really optimize the tool for your organization’s needs, you’ll have a much easier time protecting the business from the reputational and financial consequences of actualized insider risks.

3 responses to “Fine-tuning Microsoft Purview Insider Risk Management – part 3”



I’m trying to use a DLP Policy for Paste to Browser events. I’m seeing no results tho for the activity, which seems unlikely. I tried with content contains a sensitive label or content contains a classifier but no results after two weeks. I’m hoping to use this in an IRM policy as my concern is users can copy and then paste IP to any source. Not looking to create a block. I just want to see it.


That’s interesting. A few questions:

* Which SIT are you looking for in the DLP rule(s) with paste protection enabled? I don’t think (not 100% about this) that sensitivity labels work with paste protection as it classifies the pasted content (text) itself and sensitivity labels are essentially a part of the metadata of the file or email the text comes from.

* Do you have Advanced Classification enabled in tenant-wide Endpoint DLP settings? It’s required in order for some classifiers to work properly.

* Are you using Edge with version 120+ and fulfill the other technical prerequisites? (Or Chrome / Firefox with the Purview extension installed?) It’s good to verify just in case: https://learn.microsoft.com/en-us/purview/endpoint-dlp-using?tabs=purview#restrict-pasting-content-into-a-browser

I’ve personally had no major issues so far silently auditing desired scenarios with paste protection so I’m wondering what the hiccup is in your case.
